Are you having trouble getting your Blogger website indexed? This guide walks through each relevant setting so that you can fix crawling and indexing problems. I will explain how to set up a custom robots.txt file, homepage and post page header tags, meta tags, Search Console settings, and more.

Understanding Crawling

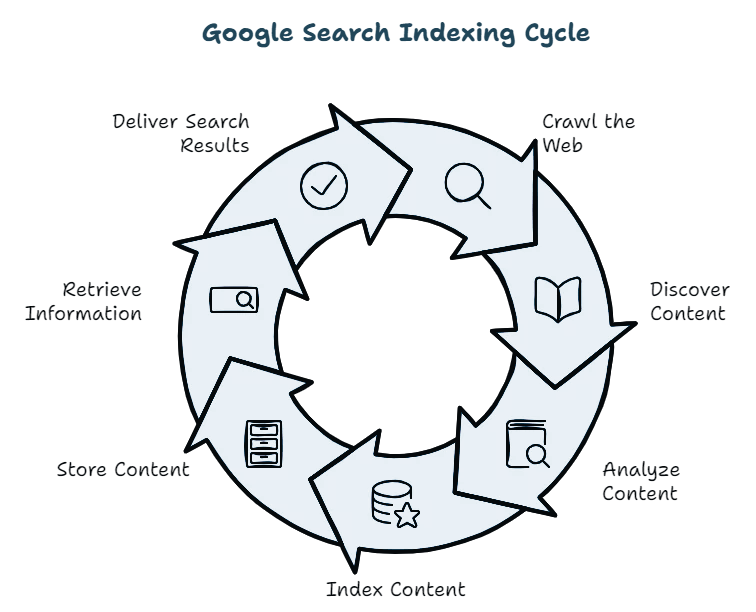

Crawling is when search engine bots, such as Googlebot, visit a website to discover its content. This process lets search engines understand the pages and add them to their database for indexing.

Understanding Search Engine Indexing

Indexing is the process through which search engines organize information to provide quick responses to user queries. Search engines store the data gathered during crawling on their servers, organizing it to effectively respond to various queries and display only relevant search results.

Search Engine Ranking

After analyzing the indexed data, search engines decide which websites to show first on search engine result pages (SERPs). Rankings are influenced by many factors, such as relevance, PageRank, website authority, and backlinks. Google is widely reported to use more than 200 ranking factors.

Resolving crawling and indexing issues can be complex and depend on various factors. Below, I will outline some settings to help mitigate these problems.

Let’s start with the Blogger settings.

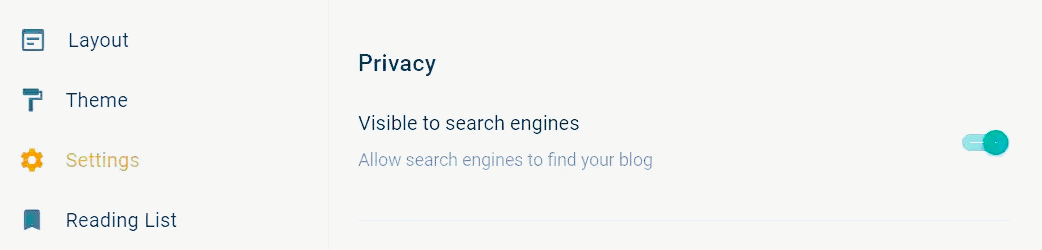

1. Privacy Settings

Navigate to the Blogger dashboard and access the Settings tab. Locate the Privacy option and activate the “Visible to search engines” setting. If this setting is disabled, the web pages will not get indexed.

2. Crawlers and Indexing Settings

Scroll to the crawlers and indexing settings and enable the custom robots.txt option.

To add the robots.txt file to your website, use the format below and replace www.example.com with your own website address.

User-agent: *
Disallow: /search
Disallow: /category/
Disallow: /tag/
Allow: /
Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/sitemap-pages.xml
Sitemap: https://www.example.com/atom.xml?redirect=false&start-index=1&max-results=500
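To see what these rules actually block, here is a minimal sketch that feeds them to Python's standard `urllib.robotparser` module. The two test URLs are hypothetical and www.example.com is only a placeholder. Note that Python's parser applies the first matching rule, while Google documents a most-specific-match approach; for this particular file both approaches should give the same answers.

```python
from urllib.robotparser import RobotFileParser

# Rules copied from the robots.txt in this guide; www.example.com is a placeholder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /category/
Disallow: /tag/
Allow: /
Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/sitemap-pages.xml
Sitemap: https://www.example.com/atom.xml?redirect=false&start-index=1&max-results=500
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making any network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Hypothetical URLs, used only to exercise the rules.
post_url = "https://www.example.com/2024/01/sample-post.html"
label_url = "https://www.example.com/search/label/seo"

print("post allowed: ", parser.can_fetch("*", post_url))
print("label allowed:", parser.can_fetch("*", label_url))
print("sitemaps:     ", parser.site_maps())  # site_maps() needs Python 3.8+
```

A regular post should come back as allowed, while anything under /search (which is where Blogger serves label and search result pages) should come back as blocked.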

If your website contains more than 500 pages, you can include multiple sitemaps. Add another Sitemap line after the last one in the file, with start-index set to 501:

Sitemap: https://www.example.com/atom.xml?redirect=false&start-index=501&max-results=500

With both lines in place, your Blogger XML sitemap covers up to 1,000 pages, which helps search engines discover all of them.
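The pattern extends beyond 1,000 pages: each extra Sitemap line moves start-index forward by 500. Here is a small sketch that generates the lines for any number of pages. The function name `atom_sitemap_lines` and its parameters are my own, chosen for illustration, and the page size of 500 simply mirrors the max-results value used in this guide.

```python
def atom_sitemap_lines(base_url: str, total_pages: int, page_size: int = 500) -> list[str]:
    """Build one 'Sitemap:' line per block of `page_size` entries.

    start-index is 1-based, so the blocks begin at 1, 501, 1001, and so on.
    """
    lines = []
    for start in range(1, total_pages + 1, page_size):
        lines.append(
            f"Sitemap: {base_url}/atom.xml"
            f"?redirect=false&start-index={start}&max-results={page_size}"
        )
    return lines


# Example: a blog with 1,200 pages needs three lines (1, 501, 1001).
for line in atom_sitemap_lines("https://www.example.com", 1200):
    print(line)
```

Paste the generated lines at the end of your custom robots.txt, after the existing Sitemap entries.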

You can also use a robots.txt generator tool to create the file for your Blogger website.

Next, enable the “custom robots header tags” option and configure the following three header tags:

- For the homepage tags, select the “all” and “noodp” options, then save the settings.
- Next, for archive and search page tags, choose the “noindex” and “noodp” options, and save.
- Finally, in Post and page tags, select the “all” and “noodp” options, and save the changes.
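After saving, it can be worth confirming what a page really tells crawlers. The sketch below assumes the header tag settings show up as a robots meta tag in the page's HTML, and the two HTML snippets are made-up samples rather than real Blogger output. It uses only Python's standard `html.parser` module.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.extend(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html: str) -> list[str]:
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


def is_indexable(html: str) -> bool:
    """A page is treated as indexable unless it carries noindex (or none)."""
    directives = robots_directives(html)
    return "noindex" not in directives and "none" not in directives


# Made-up sample pages, matching the settings chosen in this guide.
ARCHIVE_HTML = "<html><head><meta content='noindex,noodp' name='robots'/></head></html>"
POST_HTML = "<html><head><meta content='all,noodp' name='robots'/></head></html>"

print("archive page indexable:", is_indexable(ARCHIVE_HTML))
print("post page indexable:   ", is_indexable(POST_HTML))
```

To check a live page, you could save its source from the browser and pass the text to `is_indexable`.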

3. Submitting the Sitemap in Search Console

Next, submit your sitemap in Google Search Console. If you do not have an account, create one and verify your domain.

Open the Sitemaps option in Search Console, enter your sitemap URL in the format below, and click Submit.

https://www.example.com/sitemap.xml

After submission, Google will read your sitemap and use it to discover your pages. Also consider submitting your sitemap in Bing Webmaster Tools, or importing your site there from Search Console. Once the sitemap is submitted, search engine bots can find and crawl your pages for indexing without further action from you.

Some websites still run into crawling issues, which can come from crawl budget limits or redirect problems. To help with crawl budget, update your website regularly and publish articles frequently.

You can also submit a URL manually with the URL Inspection tool in Search Console. Paste the link of the newly published article and request indexing. Google will then add the page to its crawl queue, although this does not guarantee that the page will be indexed.
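Before submitting, you may want to confirm that the sitemap lists the pages you expect. The sketch below reads the `<loc>` entries from a sitemap using Python's standard `xml.etree.ElementTree` module. The sample XML is invented for illustration and follows the standard sitemaps.org format; your real sitemap may instead be a sitemap index that points to further sitemap files, in which case the same function returns those file URLs.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"

# Invented sample; www.example.com is a placeholder domain.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/2024/01/first-post.html</loc></url>
  <url><loc>https://www.example.com/2024/02/second-post.html</loc></url>
</urlset>
"""


def sitemap_locations(xml_text: str) -> list[str]:
    """Return every <loc> value found in a sitemap or sitemap index."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    return [el.text.strip() for el in root.iter(f"{SITEMAP_NS}loc") if el.text]


for url in sitemap_locations(SAMPLE_SITEMAP):
    print(url)
```

If a recently published article is missing from the list, that is a hint to look at the sitemap itself before blaming the crawler.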

How to Prevent Indexing and Crawling Issues

The following practices help prevent indexing and crawling issues and make it easier for search engines to index your pages:

- Publish articles regularly and update existing content.
- Emphasize interlinking articles for easier discovery by search engines.
- Share articles on social media for initial traffic to the pages.
- Rectify broken internal links.
- Address redirect loops that occur when two pages redirect to each other.
- Improve page loading speed.
- Resolve duplicate pages problems.
- Utilize HTML sitemaps in Blogger.

Conclusion

You have now learned how to address crawling and indexing issues on your Blogger website. Make sure your robots.txt file and meta tags are configured correctly, and follow the best practices above. If you run into any crawling or indexing problems, feel free to share them in the comments section.
